Introduction: The Problem of Probability

Artificial intelligence is not electricity. It is not aviation. It will never be an invisible utility that we simply trust to work in the background. By design, it is a probability machine: a system that guesses convincingly, fails unpredictably, and hallucinates with absolute confidence.

We are already entrusting this machine with high-stakes decisions — approving loans, screening résumés, interpreting medical scans, even piloting vehicles. The question is no longer whether AI can be trusted. It cannot. The question is whether it can be contained.

The Nature of the Risk

Unlike previous waves of technology, AI does not fail in predictable ways. It does not “break” so much as it drifts, fabricates, or amplifies bias at scale. Its errors are not bugs in code; they are features of the statistical methods themselves.

This makes AI uniquely difficult to govern. A system trained on incomplete data will confidently reproduce systemic bias. A model optimized for efficiency will ignore ethical nuance. And because machine learning systems evolve with new data, oversight cannot be a one-time approval — it must be continuous.

The result: AI governance must accept that failure is not rare, but inevitable. The task is not to create trust in the machine. It is to limit the blast radius when the machine fails.
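Continuous oversight of this kind can start small. The sketch below is one possible way to watch for drift: it compares a model's live score distribution against its training-time baseline using the Population Stability Index. The function names, the ten-bin layout, and the 0.25 threshold (a commonly cited rule of thumb, not a standard) are all assumptions made for illustration.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of a numeric score; 0.0 means identical binned distributions."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor at a tiny value so empty bins do not produce log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def needs_review(baseline, current, threshold=0.25):
    """Hypothetical escalation rule: flag the model when drift exceeds the threshold."""
    return population_stability_index(baseline, current) > threshold
```

A check like `needs_review(training_scores, live_scores)` would run on a schedule rather than once at approval time, which is the point: the alarm is part of the system, not a launch-day ritual.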

The Expanding Attack Surface

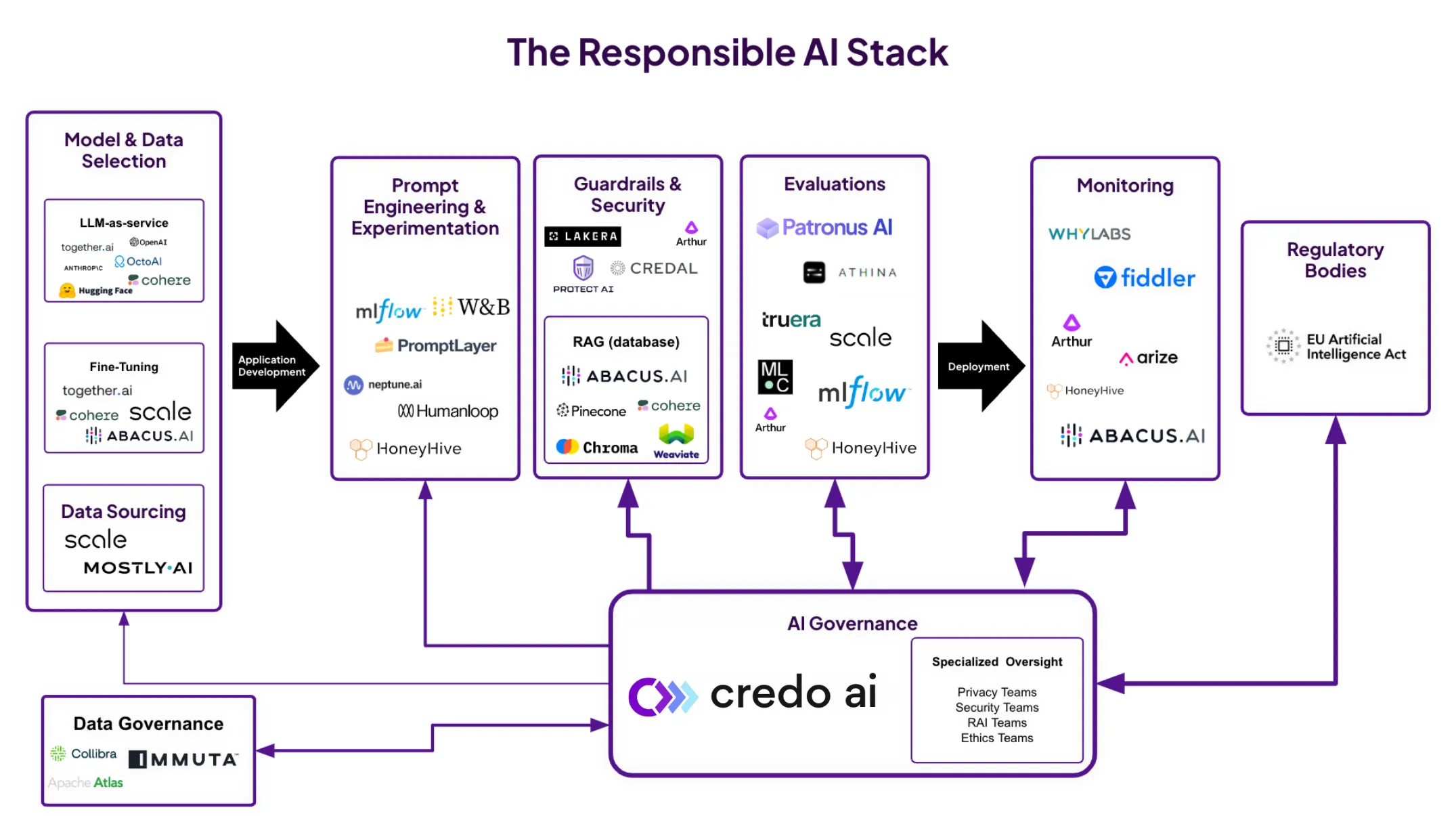

AI is not a single system but an ecosystem — and each layer introduces its own risks:

- Autonomous systems (self-driving cars, drones, industrial robotics) fail in physical space. Their mistakes are measured in human lives. Unlike traditional IT systems, they don’t fail quietly. They fail catastrophically.

- Machine learning pipelines expand the surface for data corruption and manipulation. Poisoned training data, model inversion, and adversarial inputs can compromise outputs at scale.

- AI agents compound these risks by chaining actions across systems — APIs, financial ledgers, IoT devices. A single prompt injection can cascade into real-world consequences without human oversight.

Security and governance cannot be separated here. The design of oversight mechanisms is itself a security posture. Failing to govern means creating vulnerabilities. Failing to secure means governance has no anchor.

Governance as Containment

We must reframe governance not as a promise of safety, but as a containment regime: a way to manage AI’s inevitabilities rather than pretend they don’t exist. Imagine you’re designing a dam. You don’t assume the flood will never come; you design for overflow, structural cracks, and worst-case surges. Governance for AI must do the same.

- Transparent failure plumbing: disclosing not just successes, but failure modes, data distributions, drift metrics, and known adversarial vulnerabilities.

- Named stewards: you cannot say “the model” failed. You must name the humans and teams legally responsible, with escalation paths and accountability backstops.

- Operational bias metrics: fairness isn’t a PR checkbox. It needs dashboards, regressions tracked over time, population subgroup tracking, and flagged anomalies.

- Security baked in: adversarial red-teaming, prompt injection resistance, model theft prevention — these are not add-ons; they must live inside design.

- Policy convergence: competing regulatory regimes across the EU, the U.S., and Asia will fracture deployments unless governance scales across borders with shared norms.
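To make the operational bias metrics item concrete, here is a minimal sketch of subgroup tracking: it computes approval rates per population group and flags any group whose rate falls too far below the best-served group. The record format, the function names, and the 0.8 ratio (borrowed from the four-fifths rule of thumb used in U.S. employment contexts) are illustrative assumptions, not a recommended fairness standard.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, approved) pairs, where approved is a bool."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def flag_disparity(rates, min_ratio=0.8):
    """Return groups whose rate, relative to the highest rate, is below min_ratio."""
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was approved, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < min_ratio}


# Hypothetical decisions from a loan-approval model.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
flagged = flag_disparity(selection_rates(decisions))
```

Here group B ends up in `flagged` because its approval rate sits well below group A's. In practice the flagged groups would feed a dashboard and an escalation path to the named steward, computed per release and per time window so that regressions show up as trends rather than surprises.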

Governance under this frame is not a checklist or a box to tick; it is an operating philosophy. It must be understood as the architecture of containment, the design of limits, not the illusion of control.

Artificial intelligence will never be fully trusted. It is, and will remain, a probability machine that fails in ways no human fully anticipates. But that does not make it ungovernable.

The task of governance is not to make AI safe. It is to make AI survivable — to build guardrails strong enough that when systems fail, societies, markets, and institutions do not collapse with them.

This requires us to be explicit about what we are doing and how we are doing it. Governance must be operational, transparent, and inseparable from security. Anything less is theater.

The leaders who accept this — who govern AI as the untrustworthy system it is — will not only contain risk. They will set the standard by which the rest of the world measures progress in the algorithmic age.
